The secret to AI’s learning ability lies in Occam’s razor

Discover how deep neural networks leverage simplicity bias to achieve remarkable generalization, solving key mysteries in machine learning.

Deep neural networks defy conventional learning theories by leveraging a built-in simplicity bias, favoring simpler solutions for better generalization. (CREDIT: CC BY-SA 4.0)

Deep neural networks (DNNs) have revolutionized machine learning, yet their success defies classical expectations. They perform exceptionally well even in overparameterized scenarios, where models contain far more parameters than data points. This seemingly paradoxical ability to generalize effectively has puzzled researchers for decades.

A new study from Oxford University, published in Nature Communications, sheds light on this phenomenon by uncovering a remarkable bias intrinsic to DNNs: a built-in form of Occam's razor that favors simplicity.

DNNs excel in supervised learning, where they predict labels for unseen data after being trained on labeled examples. Training involves minimizing a loss function, which measures the difference between the network's predictions and the true labels.

Despite their capacity to fit almost any data pattern, DNNs tend to converge on solutions that generalize well—solutions that work not only on training data but also on new, unseen inputs. This remarkable ability, the study argues, arises from an inherent preference for simpler functions.

Researchers examined the prior probabilities of functions expressed by DNNs. The prior of a function is the probability that a DNN with randomly initialized parameters maps the inputs to that function's output pattern. For Boolean functions, which yield true or false results, the study found an exponential bias toward functions with low descriptional complexity. This bias aligns with algorithmic information theory, in which simpler functions are those with shorter descriptions.
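To make the idea of a prior over functions concrete, here is a minimal sketch that draws random weights many times and counts how often each Boolean function appears. Everything specific in it is an assumption chosen for illustration rather than the study's setup: the one-hidden-layer tanh network, the width of 16, the Gaussian initialization scales, the 3-bit inputs, and the rule that a positive output means "true".

```python
import itertools
from collections import Counter

import numpy as np


def all_boolean_inputs(n):
    """Return every n-bit input as a (2**n, n) array of 0.0/1.0 values."""
    return np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)


def sample_function(inputs, rng, width=16, sigma_w=1.0, sigma_b=1.0):
    """Draw random weights for a one-hidden-layer tanh network and return
    the Boolean function it expresses, written as a string of 0s and 1s
    (one character per input, in truth-table order)."""
    n = inputs.shape[1]
    w1 = rng.normal(0.0, sigma_w / np.sqrt(n), size=(n, width))
    b1 = rng.normal(0.0, sigma_b, size=width)
    w2 = rng.normal(0.0, sigma_w / np.sqrt(width), size=width)
    b2 = rng.normal(0.0, sigma_b)
    logits = np.tanh(inputs @ w1 + b1) @ w2 + b2
    # Illustrative convention: a positive output is read as "true".
    return "".join("1" if z > 0 else "0" for z in logits)


def estimate_prior(n=3, samples=20000, seed=0):
    """Estimate P(f) as the fraction of random initializations that yield f."""
    rng = np.random.default_rng(seed)
    inputs = all_boolean_inputs(n)
    counts = Counter(sample_function(inputs, rng) for _ in range(samples))
    return {f: c / samples for f, c in counts.items()}
```

Calling `estimate_prior()` and sorting the result by probability shows which of the 256 possible 3-bit functions a random network of this kind expresses most often. A uniform prior would give each function a probability of 1/256, so any large departure from that value is a sign of bias in this toy setting.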

Professor Ard Louis of Oxford University explains, “While we knew DNNs rely on some form of inductive bias toward simplicity, the precise nature of this razor remained elusive. Our work reveals that DNNs’ bias counteracts the exponential growth in possible complex solutions, enabling them to generalize effectively.”
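The counting behind this statement can be sketched schematically. The symbols below (n for the number of input bits, K for descriptional complexity, N(K) for the number of functions of complexity K, P(f) for the prior) and the exact exponents are illustrative assumptions, not formulas quoted from the study.

$$
\#\{\, f : \{0,1\}^n \to \{0,1\} \,\} = 2^{2^n}, \qquad N(K) \lesssim 2^{K}
$$

$$
P(f) \propto 2^{-K(f)} \quad\Longrightarrow\quad P(K) = \sum_{f \,:\, K(f) = K} P(f) \;\approx\; N(K)\, 2^{-K} \;\lesssim\; \text{const}
$$

For n = 7 there are already 2^128 (about 3.4 × 10^38) Boolean functions, and almost all of them are complex. Under a uniform prior nearly all probability mass would sit on those complex functions. An exponential penalty of roughly 2^(-K) per function offsets the roughly 2^K growth in their number, so simple functions keep a non-negligible share of the prior.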

The team used Lempel-Ziv (LZ) complexity measures to quantify function simplicity. Under this measure, a function is simpler when the binary string listing its outputs over all inputs compresses more readily, and DNNs demonstrated a clear preference for such functions over more complex ones. This simplicity bias helps DNNs avoid overfitting by steering them away from the large majority of complex functions that fit the training data but fail on unseen inputs.
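One way to see what a Lempel-Ziv style measure captures is to write a function as the string of its outputs and count the phrases a Lempel-Ziv parser needs to cover it. The sketch below uses a plain LZ78-style phrase count as a stand-in. It is not the specific LZ estimator used in the study, and the function name is hypothetical.

```python
def lz78_phrase_count(bits):
    """Count the phrases in an LZ78-style parse of a string.

    The string is scanned left to right. Each phrase is the shortest
    prefix of the remaining text that has not been seen as a phrase
    before. Fewer phrases means a more repetitive, more compressible
    string.
    """
    phrases = set()
    current = ""
    count = 0
    for symbol in bits:
        current += symbol
        if current not in phrases:
            phrases.add(current)
            count += 1
            current = ""
    if current:
        # Leftover text that repeats an earlier phrase still needs encoding.
        count += 1
    return count
```

Applied to a constant output string such as `"0" * 32`, the parser needs far fewer phrases than for an irregular string of the same length, which is the sense in which the constant function is "simple".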

To test how this bias influences performance, the researchers altered the activation functions—mathematical mechanisms that decide whether a neuron “fires.” They observed that small changes to the simplicity bias impaired the network’s ability to generalize, even on simple Boolean functions.

For more complex targets, performance decreased further, sometimes approaching random guessing. This finding underscores how critical the specific form of simplicity bias is to a DNN’s success.

The study further explored how network performance varies with data complexity. Boolean function classification served as a model system to investigate this relationship. Fully connected networks (FCNs) were trained on subsets of Boolean inputs and tested on unseen data.
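A toy version of this kind of experiment might look like the sketch below. It trains a small fully connected network on half of a Boolean function's truth table and measures accuracy on the other half. The network size, learning rate, step count, 7-bit inputs, and the use of plain full-batch gradient descent with a cross-entropy loss are all assumptions made for illustration, and `train_and_test` is a hypothetical helper, not the study's code.

```python
import itertools

import numpy as np


def train_and_test(target, n=7, train_fraction=0.5, width=32,
                   steps=3000, lr=0.2, seed=0):
    """Train a one-hidden-layer tanh network on part of the truth table of
    `target` and return (train_accuracy, test_accuracy)."""
    rng = np.random.default_rng(seed)

    # Full truth table: every n-bit input and its Boolean label.
    x = np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)
    y = np.array([float(target(row)) for row in x])

    # Random split into seen (train) and unseen (test) inputs.
    order = rng.permutation(len(x))
    split = int(train_fraction * len(x))
    train, test = order[:split], order[split:]
    xt, yt = x[train], y[train]

    w1 = rng.normal(0.0, 1.0 / np.sqrt(n), size=(n, width))
    b1 = np.zeros(width)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(width), size=width)
    b2 = 0.0

    for _ in range(steps):
        h = np.tanh(xt @ w1 + b1)
        p = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))
        g = (p - yt) / len(yt)                 # d(mean cross-entropy)/d(logit)
        gh = np.outer(g, w2) * (1.0 - h ** 2)  # gradient at hidden pre-activations
        w2 -= lr * (h.T @ g)
        b2 -= lr * g.sum()
        w1 -= lr * (xt.T @ gh)
        b1 -= lr * gh.sum(axis=0)

    def accuracy(idx):
        pred = (np.tanh(x[idx] @ w1 + b1) @ w2 + b2) > 0
        return float(np.mean(pred == (y[idx] > 0.5)))

    return accuracy(train), accuracy(test)
```

A simple target such as `lambda row: row[0]` (the output copies the first input bit) can be compared with a harder one such as parity, `lambda row: int(row.sum()) % 2`. The article's argument suggests the network should generalize better on the former, though a toy run like this is only an illustration and not a reproduction of the reported results.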

The results demonstrated that networks with a stronger simplicity bias performed significantly better on simpler target functions. In contrast, weaker biases led to poorer generalization, particularly for simple data. These findings highlight the importance of a finely tuned bias in enabling networks to balance flexibility with robust performance.

The interplay between structured data and simplicity bias is crucial. Real-world data often exhibits inherent patterns and regularities, which align with DNNs’ preference for simple solutions. This natural synergy helps networks identify meaningful structures without overfitting. However, when the data becomes more complex or lacks clear patterns, DNNs struggle. Their performance drops, highlighting the limits of relying solely on simplicity bias.

This relationship between simplicity and data structure raises important questions about the adaptability of DNNs. For instance, what happens when training data deviates significantly from expected patterns?

The study found that even slight deviations in data structure can disrupt the network’s ability to generalize. These challenges underscore the need for further research into how networks can adapt their inductive biases to accommodate a wider range of data complexities.

The findings suggest intriguing parallels between DNNs and natural systems. The bias observed in DNNs mirrors the simplicity bias in evolutionary processes, where symmetry and regularity often emerge in biological structures.

Professor Louis highlights the potential for cross-disciplinary insights: “Our results hint at deep connections between learning algorithms and the fundamental principles of nature. Understanding these parallels could open new avenues for research in both fields.”

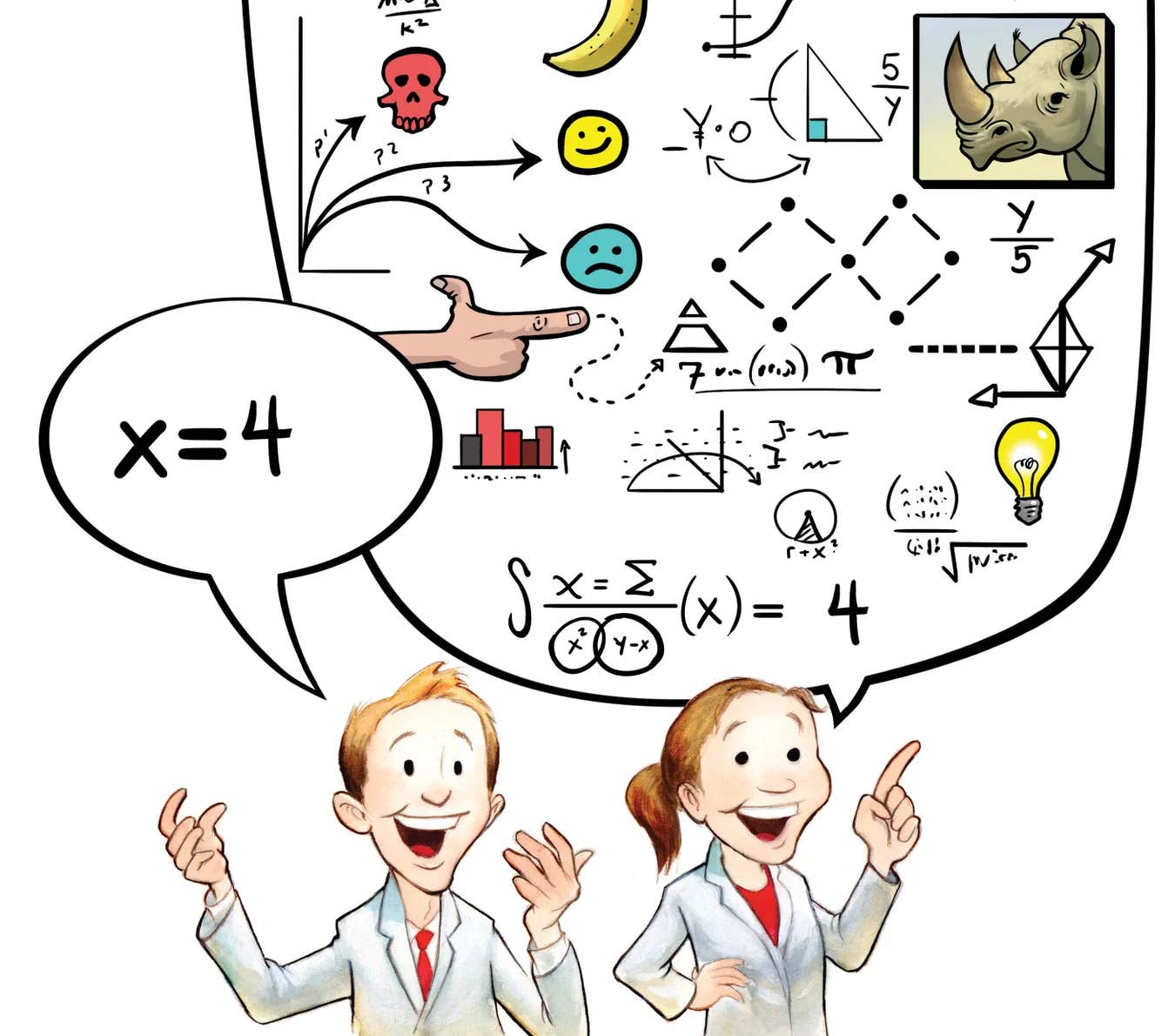

This study also addresses a long-standing debate in statistical learning theory. Conventional wisdom holds that overparameterized models risk overfitting, as famously articulated by John von Neumann’s quip about fitting an elephant with four parameters and making it wiggle its trunk with five.

Yet, DNNs defy this expectation, achieving high accuracy even with enormous capacity. The study’s insights into simplicity bias provide a compelling explanation for this phenomenon.

Moreover, the study highlights a phenomenon known as double descent. In classical learning theory, performance typically peaks at an intermediate model complexity and degrades as complexity increases further.

However, DNNs exhibit a second improvement in performance as their capacity continues to grow, defying conventional expectations. This double descent phenomenon underscores how DNNs leverage their vast parameter space to align with structured data, further enhancing their generalization abilities.

As artificial intelligence continues to integrate into critical domains, from healthcare to autonomous vehicles, understanding how DNNs arrive at their decisions becomes increasingly important. The inherent simplicity bias not only aids generalization but also offers a starting point for “opening the black box” of AI systems. By clarifying the principles guiding DNN decision-making, researchers can enhance transparency and accountability in AI applications.

However, challenges remain. While simplicity explains much of DNNs’ success, the study acknowledges that specific architectural and training choices also influence performance. Further research is needed to uncover additional biases and refine models for diverse applications.

For example, understanding how variations in activation functions or training algorithms affect bias could provide deeper insights into the mechanisms driving DNN performance.

The study’s implications extend beyond artificial intelligence. By drawing parallels between DNNs and natural systems, researchers can explore how principles like simplicity bias manifest in other domains.

For instance, evolutionary biology and neuroscience may benefit from these insights, shedding light on how natural systems balance complexity and simplicity to achieve optimal functionality.

In summary, this groundbreaking study reveals that the remarkable performance of DNNs stems from a built-in simplicity bias that mirrors principles found in nature. This discovery not only deepens our understanding of machine learning but also opens pathways for innovations in AI and beyond.

As DNNs continue to shape the future of technology, uncovering the intricacies of their inductive biases will be crucial for unlocking their full potential.

Note: Materials provided above by The Brighter Side of News. Content may be edited for style and length.

Joshua Shavit

Science & Technology Writer | AI and Robotics Reporter
