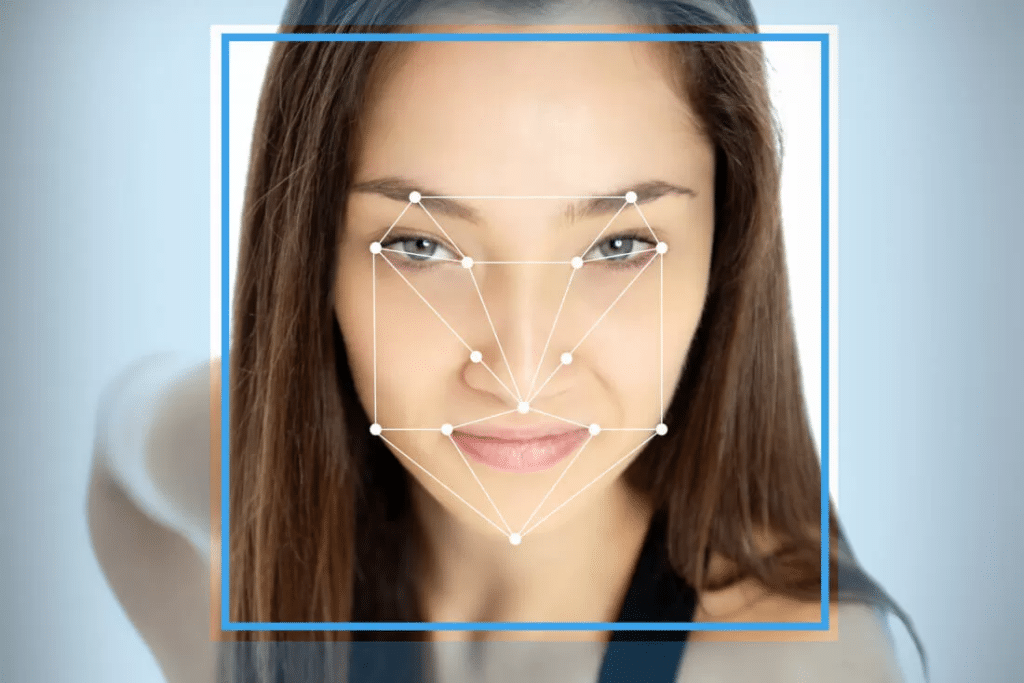

New AI software can tell you where to apply makeup to fool facial recognition

Researchers have found a way to thwart facial recognition cameras using certain software-generated patterns and natural makeup techniques

[Sept 23, 2021: Katya Pivcevic]

Researchers at Israel's Ben-Gurion University of the Negev have found a way to thwart facial recognition cameras using certain software-generated patterns and natural makeup techniques, with a success rate of 98 percent.

For the study ‘Dodging Attack Using Carefully Crafted Natural Makeup’, the team of five used YouCam Makeup, a selfie app, to digitally apply makeup to identifiable regions of 20 participants’ faces.

The AI developed a digital heat map of a participant's face, highlighting areas that face recognition systems would likely peg as highly identifiable. A digital makeup projection then indicated where to apply makeup to alter the contours of the person's face. A makeup artist physically recreated the digitally applied, software-generated makeup patterns on participants, but in a naturalistic way.

Participants walked through a hallway filmed by two cameras. In both the physical and digital makeup tests, the participants were registered as blacklisted individuals for the system to watch for.

The face biometric system (ArcFace) was unable to identify any of the participants where makeup was digitally applied. For the physical makeup recreation experiment, “the face recognition system was able to identify the participants in only 1.22 percent of the frames (compared to 47.57 percent without makeup and 33.73 percent with random natural makeup), which is below a reasonable threshold of a realistic operational environment,” states the paper.
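The per-frame figures quoted above are a simple ratio: the number of video frames in which the system matched a participant, divided by the total number of frames. The sketch below illustrates that arithmetic only. It assumes the system compares a face embedding from each frame with an enrolled embedding using cosine similarity and a fixed threshold, which is common for embedding-based recognition but is not described in the article. The function names, the 0.5 threshold, and the three-dimensional toy vectors are hypothetical and are not taken from the study.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identification_rate(frame_embeddings, enrolled_embedding, threshold=0.5):
    """Fraction of frames whose similarity to the enrolled face meets the threshold.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    if not frame_embeddings:
        return 0.0
    hits = sum(
        1
        for emb in frame_embeddings
        if cosine_similarity(emb, enrolled_embedding) >= threshold
    )
    return hits / len(frame_embeddings)


# Toy data: real face embeddings have hundreds of dimensions.
enrolled = [1.0, 0.0, 0.0]
frames = [
    [0.9, 0.1, 0.0],  # close to the enrolled face
    [0.0, 1.0, 0.0],  # unrelated direction
    [0.2, 0.5, 0.7],  # mostly unrelated
    [1.0, 0.0, 0.1],  # close to the enrolled face
]

rate = identification_rate(frames, enrolled)
print(f"Identified in {rate:.2%} of frames")
```

Under this framing, effective makeup pushes the embeddings of most frames below the threshold, so the ratio falls toward zero.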

“[The makeup artist] didn’t do too much tricks, just see the makeup in the image and then she tried to copy it into the physical world. It’s not a perfect copy there. There are differences but it still worked,” said Nitzan Guetta, a doctoral student and lead author of the study.

“Our attacker assumes a black-box scenario, meaning that the attacker cannot access the target FR model, its architecture, or any of its parameters. Therefore, [the] attacker’s only option is to alter his/her face before being captured by the cameras that feeds the input to the target FR model,” according to the research paper.

Adversarial machine learning (AML) attacks have been conducted before. In June, Israeli firm Adversa announced the creation of the ‘Adversarial Octopus,’ a black-box transferable attack designed to fool face biometrics models.

While facial recognition systems have not historically been able to identify those wearing face coverings, the pandemic accelerated the drive to advance this capability. Corsight AI announced in July that the company’s facial recognition system, Fortify, is able to identify individuals wearing motorcycle helmets and face covers at the same time.
