First wireless earbuds that clear up calls using deep learning

“ClearBuds” use a novel microphone system and one of the first machine-learning systems to operate in real time and run on a smartphone.

[Aug 3, 2022: Sarah McQuate, University of Washington]

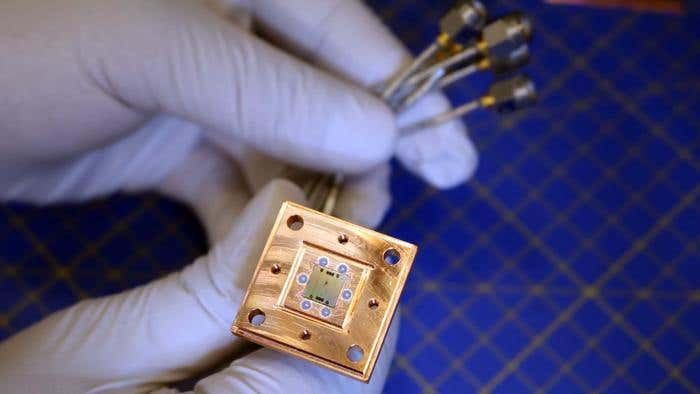

ClearBuds use a novel microphone system and are one of the first machine-learning systems to operate in real time and run on a smartphone. (CREDIT: Raymond Smith/University of Washington)

As meetings shifted online during the COVID-19 lockdown, many people found that chattering roommates, garbage trucks and other loud sounds disrupted important conversations.

This experience inspired three University of Washington researchers, who were roommates during the pandemic, to develop better earbuds. To enhance the speaker's voice and reduce background noise, "ClearBuds" use a novel microphone system and one of the first machine-learning systems to operate in real time and run on a smartphone.

The researchers presented this project June 30 at the ACM International Conference on Mobile Systems, Applications, and Services.

"ClearBuds differentiate themselves from other wireless earbuds in two key ways," said co-lead author Maruchi Kim, a doctoral student in the Paul G. Allen School of Computer Science & Engineering. "First, ClearBuds use a dual microphone array. Microphones in each earbud create two synchronized audio streams that provide information and allow us to spatially separate sounds coming from different directions with higher resolution. Second, the lightweight neural network further enhances the speaker's voice."

While most commercial earbuds also have microphones on each earbud, only one earbud is actively sending audio to a phone at a time. With ClearBuds, each earbud sends a stream of audio to the phone. The researchers designed Bluetooth networking protocols to allow these streams to be synchronized within 70 microseconds of each other.
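To get a feel for the 70-microsecond figure, the short sketch below converts it into the distance sound travels in that time and into a number of audio samples. Only the 70 microseconds comes from the article. The speed of sound (about 343 m/s in room-temperature air) is a textbook approximation, and the 16 kHz sample rate is an assumption chosen for illustration, not a reported ClearBuds setting.

```python
SPEED_OF_SOUND_M_PER_S = 343.0   # approximate value for air near 20 degrees C
SYNC_ERROR_S = 70e-6             # synchronization bound quoted in the article
ASSUMED_SAMPLE_RATE_HZ = 16_000  # assumption for illustration only


def sync_error_as_distance_cm(sync_error_s: float) -> float:
    """Distance (in centimeters) that sound travels during the timing error."""
    return SPEED_OF_SOUND_M_PER_S * sync_error_s * 100.0


def sync_error_in_samples(sync_error_s: float, sample_rate_hz: float) -> float:
    """Timing error expressed as a (possibly fractional) number of samples."""
    return sync_error_s * sample_rate_hz


if __name__ == "__main__":
    print(f"{sync_error_as_distance_cm(SYNC_ERROR_S):.2f} cm of sound travel")
    print(f"{sync_error_in_samples(SYNC_ERROR_S, ASSUMED_SAMPLE_RATE_HZ):.2f} samples")
```

Under these assumptions, the two streams disagree by roughly the time it takes sound to travel a couple of centimeters, which is small compared with the spacing between a wearer's ears.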

The team's neural network algorithm runs on the phone to process the audio streams. First, it suppresses any non-voice sounds. Then it isolates and enhances any sound that arrives at both earbuds at the same time: the speaker's voice.

"Because the speaker's voice is close by and approximately equidistant from the two earbuds, the neural network can be trained to focus on just their speech and eliminate background sounds, including other voices," said co-lead author Ishan Chatterjee, a doctoral student in the Allen School. "This method is quite similar to how your own ears work. They use the time difference between sounds coming to your left and right ears to determine from which direction a sound came from."
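The time-difference idea in the quote can be illustrated with a classical technique: cross-correlating the two channels and finding the lag at which they line up best. This is a minimal sketch, not the team's neural network. The function names and the toy pulse are hypothetical and chosen only for illustration.

```python
def best_lag(left, right, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation.

    A positive lag means the sound reached the right channel later
    than the left channel. Both channels must have the same length.
    """
    n = len(left)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def delayed(signal, delay):
    """Shift a signal later by `delay` samples (delay >= 0), zero-padded."""
    return [0.0] * delay + signal[: len(signal) - delay]


# Toy pulse surrounded by silence (hypothetical data).
pulse = [0.0] * 10 + [1.0, -2.0, 3.0, -2.0, 1.0] + [0.0] * 10

# A source equidistant from both microphones, like the wearer's mouth.
voice_lag = best_lag(pulse, pulse, max_lag=5)

# A source off to one side reaches the right microphone 3 samples later.
side_lag = best_lag(pulse, delayed(pulse, 3), max_lag=5)

if __name__ == "__main__":
    print(voice_lag, side_lag)  # prints: 0 3
```

A lag near zero is consistent with a source equidistant from both earbuds, while a nonzero lag points to a source off to one side. According to the article, ClearBuds' network is trained to exploit this kind of cue rather than computing it explicitly this way.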

When the researchers compared ClearBuds with Apple AirPods Pro, ClearBuds performed better, achieving a higher signal-to-distortion ratio across all tests.
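For readers unfamiliar with the metric, signal-to-distortion ratio compares the energy of the clean speech with the energy of whatever error remains in the processed output, expressed in decibels. The sketch below uses one common, simplified definition. The article does not say which variant the researchers used, so the formula and the sample values here are assumptions for illustration.

```python
import math


def sdr_db(reference, estimate):
    """Simplified signal-to-distortion ratio in decibels.

    reference: clean target samples
    estimate:  processed output samples (same length as reference)
    Higher values mean the estimate is closer to the reference.
    """
    if len(reference) != len(estimate):
        raise ValueError("reference and estimate must have the same length")
    signal_energy = sum(r * r for r in reference)
    if signal_energy == 0:
        raise ValueError("reference must contain a non-silent signal")
    distortion_energy = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    if distortion_energy == 0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(signal_energy / distortion_energy)


if __name__ == "__main__":
    clean = [1.0, -1.0, 1.0, -1.0]     # hypothetical clean speech samples
    processed = [0.9, -1.1, 1.1, -0.9]  # hypothetical output, off by 0.1 each
    print(f"{sdr_db(clean, processed):.1f} dB")
```

In this toy case the error energy is one hundredth of the signal energy, which works out to about 20 dB.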

Shown here, the ClearBuds hardware (round disk) in front of the 3D printed earbud enclosures. (Credit: Raymond Smith/University of Washington)

"It's extraordinary when you consider the fact that our neural network has to run in less than 20 milliseconds on an iPhone that has a fraction of the computing power compared to a large commercial graphics card, which is typically used to run neural networks," said co-lead author Vivek Jayaram, a doctoral student in the Allen School.

"That's part of the challenge we had to address in this paper: How do we take a traditional neural network and reduce its size while preserving the quality of the output?"

The team also tested ClearBuds "in the wild," by recording eight people reading from Project Gutenberg in noisy environments, such as a coffee shop or on a busy street. The researchers then had 37 people rate 10- to 60-second clips of these recordings. Participants rated clips that were processed through ClearBuds' neural network as having the best noise suppression and the best overall listening experience.

One limitation of ClearBuds is that people have to wear both earbuds to get the noise suppression experience, the researchers said.

But the real-time communication system developed here can be useful for a variety of other applications, the team said, including smart-home speakers, robot location tracking, and search-and-rescue missions.

The team is currently working on making the neural network algorithms even more efficient so that they can run on the earbuds.

Note: Materials provided above by University of Washington. Content may be edited for style and length.

Joshua Shavit

Science & Technology Writer | AI and Robotics Reporter
