Breakthrough technology reconstructs speech directly from brain activity

Researchers created and used complex neural networks to recreate speech from brain recordings, promising transformative advancements.

[Oct. 12, 2023: Staff Writer, The Brighter Side of News]

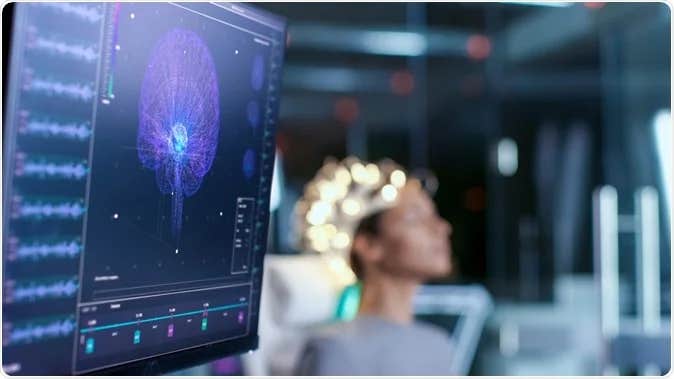

Researchers created and used complex neural networks to recreate speech from brain recordings, and then used that recreation to analyze the processes that drive human speech. (CREDIT: Shutterstock)

Speech production, a symphony of neural complexities, has traditionally kept scientists at a loss for words. But a groundbreaking study from New York University has started to put the pieces together, promising transformative advancements for vocal prosthetics that can restore individual voices.

At the crux of this paradigm shift is the intricate interplay between the neural regions steering muscle actions in our mouth, jaw, and tongue, and the areas interpreting the auditory feedback of our self-generated sounds.

To realize the full potential of future speech-producing prosthetics, understanding and separating these intertwining processes is paramount.

Led by a collaborative team from NYU, the recent research represents a significant stride in this direction, leveraging advanced neural networks to both recreate and then analyze speech from brain signals.

Related Stories:

Adeen Flinker, the Associate Professor of Biomedical Engineering at NYU Tandon and Neurology at NYU Grossman School of Medicine, teamed up with Yao Wang, Professor of Biomedical Engineering and Electrical and Computer Engineering at NYU Tandon, and a prominent figure at NYU WIRELESS. Their compelling results were featured in the renowned Proceedings of the National Academy of Sciences (PNAS) journal.

Human speech, an intricate dance of neural signals, hinges on a dual-control mechanism: feedforward control guiding our motor functions and feedback processing interpreting our self-produced speech. To execute this sophisticated dance, several brain networks must operate in perfect sync. Until now, differentiating between the two, especially concerning the timing and level of brain engagement, remained a challenge.

Using an avant-garde deep learning architecture on human neurosurgical recordings, the research group formulated a unique rule-based differentiable speech synthesizer. This tool enabled them to decode speech from cortical signals. By tapping into neural network blueprints that could differentiate between causal, anticausal, or a blend of both temporal convolutions, the team could discern the precise roles of feedforward and feedback processes in speech production.

Speech decoding framework. The overall structure of the decoding pipeline. ECoG amplitude signals are extracted in the high gamma range (i.e., 70–150 Hz). The ECoG Decoder translates neural signals from the electrode array to a set of speech parameters. (CREDIT: PNAS)

"This approach permitted a clearer understanding of the simultaneous processing of feedforward and feedback neural signals that arise as we speak and perceive our voice's feedback," explained Flinker.

The study's revelations have reshaped conventional beliefs separating feedforward and feedback brain networks. Their analysis exposed a nuanced, interconnected feedback and feedforward processing framework stretching across frontal and temporal brain cortices. This fresh outlook, coupled with their superior speech decoding results, has propelled our comprehension of the multifaceted neural operations governing speech creation.

Comparison of original and decoded speech produced by the model. Spectrograms of decoded (Left) and original (Right) speech exemplar words. (CREDIT: PNAS)

The practical implications of this study are nothing short of revolutionary. Drawing from their findings, the researchers are pioneering vocal prosthetics capable of translating brain activity directly into speech. Many teams globally are venturing down this path, but NYU's model boasts an unparalleled edge. Their device can meticulously replicate the patient's unique voice based on minimal voice recording data, such as snippets from YouTube videos or Zoom calls.

To acquire the vital data that underpinned their research, the team collaborated with patients suffering from refractory epilepsy, a condition resistant to conventional medication. These patients, equipped with a grid of subdural EEG electrodes to monitor their condition for a week, generously agreed to additional micro-electrodes to aid the research. Their contribution afforded the researchers an invaluable window into the neural intricacies of speech generation.

Averaged signal of input ECoG projected on the standardized MNI anatomical map. The colors reflect the percentage change of high gamma (250 to 750 ms relative to speech onset) during production compared to the baseline level during the prestimulus baseline period. (CREDIT: PNAS)

The extensive team that championed this venture encompasses Ran Wang, Xupeng Chen, and Amirhossein Khalilian-Gourtani from NYU Tandon’s Electrical and Computer Engineering Department, Leyao Yu of the Biomedical Engineering Department, Patricia Dugan, Daniel Friedman, and Orrin Devinsky from NYU Grossman’s Neurology Department, and Werner Doyle from the Neurosurgery department.

The promise of returning a patient's unique voice after a loss is monumental. It isn't merely about restoring the ability to speak; it's about returning a piece of identity. With pioneers like Flinker and Wang at the helm, the horizon appears brighter for countless individuals hoping to rediscover their voice.

For more science and technology stories check out our New Discoveries section at The Brighter Side of News.

Note: Materials provided above by The Brighter Side of News. Content may be edited for style and length.

Like these kind of feel good stories? Get the Brighter Side of News' newsletter.