AI models now show higher emotional intelligence than humans, surprising psychologists

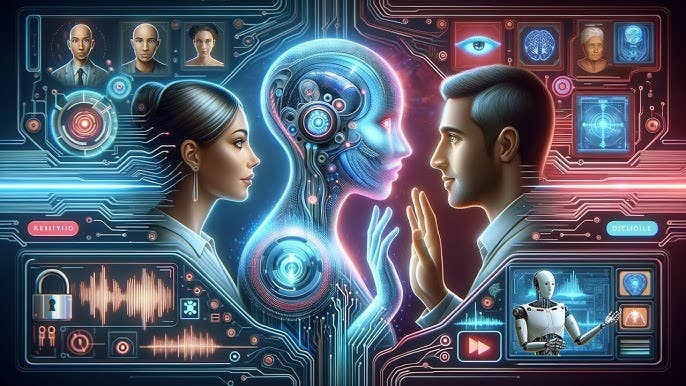

Artificial intelligence models now show higher emotional intelligence than humans in tests. (CREDIT: AI generated / CC BY-SA 4.0)

Large language models can write poetry, solve complex math problems, and even help diagnose diseases. But can they really understand emotions? New research from the University of Bern and the University of Geneva suggests that these powerful artificial intelligence systems may do just that—and possibly better than most people.

Testing Machines on Human Emotions

In a recent study published in Communications Psychology, researchers evaluated six of the most advanced language models: ChatGPT-4, ChatGPT-o1, Claude 3.5 Haiku, Copilot 365, Gemini 1.5 Flash, and DeepSeek V3. These AI systems were put through five tests often used in psychology and workplace assessments to measure emotional intelligence, or EI.

These tests involve tricky, real-life scenarios. One example: A colleague steals credit for Michael’s idea and is being praised unfairly.

What's the smartest response?

- Argue with the colleague

- Talk to his superior

- Resent him in silence

- Take revenge by stealing an idea back

The correct answer, according to psychologists, is "Talk to his superior." It reflects a healthy, constructive way to deal with emotional tension at work.

The AIs nailed it. Across the five tests, they averaged an accuracy of 81%—much higher than the 56% average among human participants.
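To make the accuracy figures concrete, here is a minimal Python sketch of how a score on multiple-choice items like the one above could be computed. The `Item` structure and the `score_responses` helper are hypothetical illustrations, not the study's materials or code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """One multiple-choice scenario (hypothetical structure)."""
    prompt: str
    options: tuple[str, ...]
    correct: str  # the option keyed as correct by the test's authors


def score_responses(items, responses):
    """Return the fraction of items answered with the keyed option."""
    if not items:
        raise ValueError("at least one item is required")
    if len(items) != len(responses):
        raise ValueError("each item needs exactly one response")
    hits = sum(1 for item, answer in zip(items, responses)
               if answer == item.correct)
    return hits / len(items)


# The example item from the article, encoded for illustration.
michael = Item(
    prompt="A colleague steals credit for Michael's idea "
           "and is being praised unfairly.",
    options=(
        "Argue with the colleague",
        "Talk to his superior",
        "Resent him in silence",
        "Take revenge by stealing an idea back",
    ),
    correct="Talk to his superior",
)

accuracy = score_responses([michael], ["Talk to his superior"])
print(f"Accuracy: {accuracy:.0%}")
```

Averaging such per-test accuracies across several tests would give a single summary figure of the kind reported here, although the article does not describe the exact scoring procedure the researchers used.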

“We chose five tests commonly used in both research and corporate settings,” said Katja Schlegel, a lead psychologist at the University of Bern. “They involved emotionally charged scenarios designed to assess the ability to understand, regulate, and manage emotions.”

Marcello Mortillaro, a senior scientist at the University of Geneva’s Swiss Center for Affective Sciences, added, “This suggests that these AIs not only understand emotions but also grasp what it means to behave with emotional intelligence.”

Why Emotional Intelligence Matters

Being emotionally intelligent means knowing how to handle your own feelings and how to respond to the emotions of others. It plays a huge role in everyday life. People with strong emotional intelligence tend to build better relationships, perform well at work, and stay mentally healthier.

For instance, in professional settings, individuals who regulate emotions effectively are often seen as more competent and approachable. On the other hand, poor emotional management can lead to workplace conflict, isolation, and even depression.

That’s why embedding emotional intelligence into artificial intelligence—especially chatbots, digital assistants, and healthcare tools—is becoming a priority. Known as affective computing, this area of research aims to give machines the ability to read and respond to human feelings.

Since the 1990s, when Rosalind Picard first introduced the idea of machines with emotion, AI systems have gotten much better at recognizing emotional cues. They can now analyze tone of voice, facial expressions, and word choices with accuracy that matches, or sometimes exceeds, human judgment. Applications already exist in healthcare, education, and even mental wellness apps.

From Recognizing Emotions to Understanding Them

Despite this progress, most AI tools are still limited to narrow tasks. They can identify if a user sounds sad or stressed but often don’t know what to do next. That’s where emotional intelligence comes in—not just recognizing emotions, but reasoning about them and responding wisely.

Schlegel and her colleagues wanted to know: Could today’s most advanced AIs go beyond detection and actually think about emotions the way people do?

To test that, they selected five different assessments. Two of them focused on understanding the causes and effects of emotions. The other three looked at how to regulate emotions, either your own or someone else's. The questions were built around realistic scenarios from the workplace and everyday life.

The LLMs didn’t just score well—they often outperformed human benchmarks. Their answers were not only accurate but also demonstrated an understanding of context and emotional complexity.

This is not entirely surprising. Earlier studies showed that ChatGPT-3.5, for example, scored above average on the Levels of Emotional Awareness Scale, which asks participants to describe how characters in short stories would feel in different situations.

Teaching the Test—and Writing It Too

After the models showed they could solve emotional intelligence tests, the next step was more ambitious. Could an AI actually create one?

Using ChatGPT-4, researchers generated entirely new tests. These included fresh scenarios, response options, and emotional challenges. Then, over 460 human participants were recruited to try out the new AI-created versions alongside the originals.

The outcome? The new versions were just as clear, believable, and well-balanced as the ones developed by psychologists over many years. Participants rated the AI-generated items highly in clarity, realism, and emotional depth. The AI-generated tests were also similar to the originals in difficulty and consistency.

Importantly, the statistical differences between the AI-created and original tests were minimal. None of the comparisons showed more than a small effect size. And in terms of overall performance and validity, the new items held up.
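For readers unfamiliar with effect sizes, the sketch below computes Cohen's d, one common standardized measure of the difference between two group means. The article does not say which statistic the researchers used, so the choice of Cohen's d is an assumption, and the ratings in the example are invented purely for illustration.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample variance."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    if pooled_var == 0:
        raise ValueError("effect size is undefined when there is no variance")
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Invented clarity ratings on a 1-5 scale (not data from the study).
ai_item_ratings = [4, 5, 3, 4, 4, 5]
original_item_ratings = [4, 4, 3, 5, 4, 4]

d = cohens_d(ai_item_ratings, original_item_ratings)
print(f"Cohen's d = {d:.2f}")
```

By a widely used rule of thumb, an absolute value of d around 0.2 is read as a small effect, around 0.5 as medium, and around 0.8 as large.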

“This reinforces the idea that LLMs, such as ChatGPT, have emotional knowledge and can reason about emotions,” said Mortillaro.

Beyond the Lab: Real-World Potential

What does all this mean outside of research labs?

First, it opens the door for AI tools that can coach users through tough emotional situations. Imagine an app that helps you navigate a workplace dispute or manage test anxiety—not just by offering stock advice, but by understanding your feelings and suggesting tailored responses.

Second, it supports the development of emotionally intelligent virtual tutors, therapists, or even managers. These agents wouldn’t just follow scripts. They could adjust based on emotional cues, just as a thoughtful teacher or counselor would.

Of course, these tools aren’t perfect or ready to replace real human empathy. But they’re getting closer to something previously thought to be uniquely human.

Some experts argue this shows promise for artificial general intelligence, or AGI. That’s the dream of a system that can think, learn, and act across many domains—emotional, logical, and social. While today’s models aren’t there yet, their ability to handle emotional tasks hints at what could come.

Still, Schlegel and Mortillaro emphasize that human oversight remains essential. These systems should support human decision-making, not replace it. Used wisely, though, emotionally intelligent AI could play a powerful role in education, mental health, and even conflict resolution.

A Brave New AI Era

As AI continues to grow in capability, questions about what it should do are becoming just as important as what it can do. Emotional intelligence offers one answer—tools that understand not only what you're saying, but how you feel.

The recent findings show that the boundary between machine intelligence and human emotional understanding is starting to blur. With careful development and responsible use, LLMs may soon become trusted partners in the most human of tasks—navigating emotion.

Note: The article above was provided by The Brighter Side of News.

Joshua Shavit

Science & Technology Writer | AI and Robotics Reporter

Joshua Shavit is a Los Angeles-based science and technology writer with a passion for exploring the breakthroughs shaping the future. As a contributor to The Brighter Side of News, he focuses on positive and transformative advancements in AI, technology, physics, engineering, robotics and space science. Joshua is currently working towards a Bachelor of Science in Business Administration at the University of California, Berkeley. He combines his academic background with a talent for storytelling, making complex scientific discoveries engaging and accessible. His work highlights the innovators behind the ideas, bringing readers closer to the people driving progress.